Behave From the Void: Unsupervised Active Pre-training paper

The paper, Behave From the Void: Unsupervised Active Pre-training, proposed a new method for pretraining RL agents, APT , which is claimed to beat all baselines on DMControl Suite. As the abstract pointed out: the key novel idea is to explore the environment by maximizing a non-parametric entropy computed in a abstract representation space. This blog will take a look at the motivation, method and explanation of the paper, as well as compare it with the other AAAI paper.

Pre-training RL

Pre-training RL, as a relatively independent branch of the RL family, has certain experiment setting. Agents are trained in a reward-free environment for a long time and then put into testing environments to maximize the sum of expected future reward in zero-shot or few shot manner. There is a exact two-phase process which is also reflected by the code implementation. As reported in the paper, SOTA methods maximize the mutual information between policy-conditioning variable $w$ and the behavior induced by the policy in terms of state visitation $s$.

$$max I(s;w)=max H(w) - H(w|s)$$

To be honest, I’m a little confused about the line above. Let’s look at $w$ and $s$. $w$ is sampled from a fixed distribution in practice as in DIAYN, which is a paper focusing on hierarchical RL. There is also an alternative form of the objective:

$$max I(s;w)=max H(s) - H(s|w)$$

Idea and Method

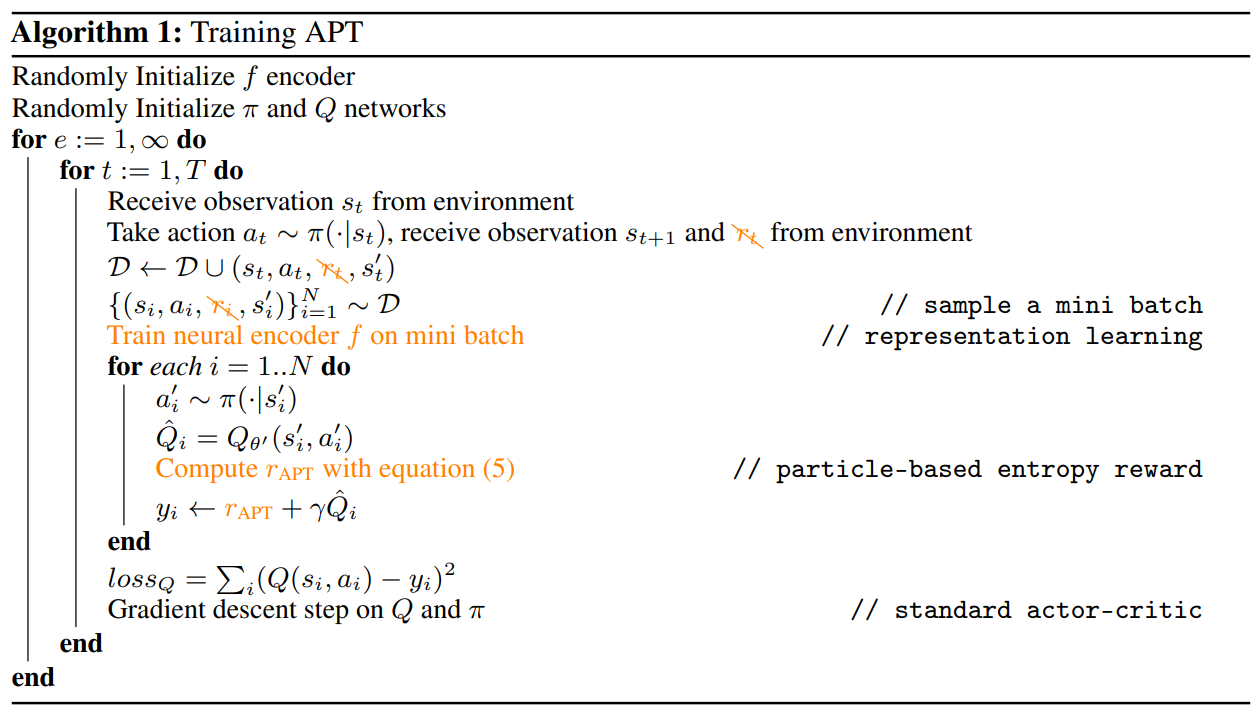

The idea of APT is to define a intrinsic reward that encourage agent to maximize entropy in representation space. There are 2 steps to do so: first learn a mapping from $R^{ns}$ to $R^{nz}$, second proposed a particle-based nonparametric approach to maximize the entropy.

Suppose we have now leant a representation function $f_\theta$ for states. Authors used a entropy estimator that measure the sparsity of distribution by considering the distance between each sample point and its k-nearest neighbor. Assuming we have n data points ${z_i}^n_{i=1}$, the approximation is:

$$H_{particle}(z)=-\frac{1}{n} \sum^{n}_{i=1}log\frac{k}{nv^k_i}+b(k)$$

where $b(k)$ is a bias correction depends only on $k$, and $v^k_i$ is the volume of hypersphere with radius $||z_i - z_i^{(k)}||$ between $z$ and its k-th nearest neighbor. By bring into the hypersphere’s volume, we see

$$H_{particle}(z)\propto \sum^{n}_{i=1}log||z_i - z_i^{(k)}||^{nz}$$

In practice, the authors used the average distance for all nearest k neighbors in the equation above. And we can then design a reward function, as we view the entropy as an expected reward with reward function being $r(z_i)=log(c+\frac{1}{k}\sum_{z^{(j)}\in N_K(z=f_\theta(s))}||f_\theta(s)-z^{(j)}||^{nz})$ for each particle $z_i$.

$$r(s,a,s’)=log(c+\frac{1}{k}\sum_{z^{(j)}\in N_K(z=f_\theta(s))}||f_\theta(s)-z^{(j)}||^{nz})$$

Compared with the entropy part, the representation function is relatively simple: a common SimCLR representation. And then we have a algorithm:

Comparison

The paper, Generalizing Reinforcement Learning through Fusing Self-Supervised Learning into Intrinsic Motivation, is quite similar to APT. They both have designed intrinsic reward and representation learning component. However, they just look similar. They are different in several ways:

- Setting: generalization vs pre-training

- Network architecture

- Emphasize on different part