Generalizable Imitation Learning from Observation via Inferring Goal Proximity is a NIPS2021 paper which focuses on the generalization problem of Learning from Demonstration(LfO). The idea of the paper is quite straightforward without much mathematical explanations. In this blog I will show the high-level idea and experiment setting of the paper.

Preliminaries: LfO and “Goal” idea

LfO is an imitation learning setting, where we cannot access the action information of experts’ demonstrations. In traditional imitation learning, like BC or GAIL, we have sequences of expert’s observations and actions $(s_0,a_0,s_1,a_1,\dots,s_T)$. In LfO setting, we only have $(s_0,s_1,s_2,\dots,s_T)$. This makes imitating harder because we have to infer, by ourselves, what the action is. Of course LfO setting may be applied to a broader real-life scenario. Goal-conditioned RL is anaother heated field today where RL agents aims to reach certain goal by reducing the distance from current state to “goal”.

Generalizable LfO via Inferring Goal Proximity

The authors raise several issues that reduce the generalization of LfO: 1) Adversarial Imitation Learning “tends to find spurious associations between task-irrelevant features and expert/agent labels” 2) Current reward recovered by AIL contains less information of task structure, which is essential to generalize to unseen states. To overcome these problems, the authors changed the reward in GAIL framework by utilizing goal proximity, “which estimates temporal distance to the goal (i.e. number of actions required to reach the goal)”.

The core design of the paper is goal proximity function : $f:S\rightarrow [0,1]$ that predicts goal proximity of a state, “which is a discounted value based on the temporal distance to the goal (i.e. inversely proportional to the number of actions required to reach the goal)”. The goal proximity used in the paper is $f(s_t)=\delta^{(T_i-t)},\delta \in [0,1]$. We can say this estimate how far it is from current state to goal state. And the predictor of goal proximity $f_\phi$ is trained with loss function:

$$\mathcal{L}_{\phi} = E_{\pi^e_i \sim d^e,s_t\sim\tau^e_i}[f_{\phi(s_t)}-\delta^{T_i-t}]^2 + E_{\tau\sim \pi_{\theta},s_t\sim \tau}[f_{\phi(s_t)}]^2$$

We can see that the first term is a squared distance to encourage prediction. However, the second one is to utilize online agent experience to avoid overfitting to expert data. In this case, the goal proximity of agent is set to 0. This method may results in a case that, when the agent is well-trained, it might still get a low goal proximity prediction. The authors eased this problem by early stopping and learning rate decay.

As for the policy, the reward recovered, or say constructed to be more clear, is

$$R_\phi(s_t,s_t+1)=f_{\phi(s_t+1)} - f_{\phi(s_t)}$$

The policy is trained to maximize

$$E_{(s_0,\dots,s_{T_i})\sim \pi_{\theta}}[\sum^{T_i-1}_{0} \gamma^t R_{\phi} (s_t,s_{t+1})]$$

which is of off-policy style. The policy training still has several problems as goal proximity function does. “However, a policy trained with the proximity reward can sometimes acquire undesired behaviors by exploiting over-optimistic proximity predictions on states not seen in the expert demonstrations”. A modification to reward function is made to alleviate the problem: $$R_\phi(s_t,s_t+1)=f_\phi(s_t+1)-f_\phi(s_t)+\lambda\cdot U_\phi(s_{t+1})$$ where $U$ models uncertainty in goal proximity prediction as the disagreement of an ensemble of proximity functions by computing the standard deviation of their outputs.

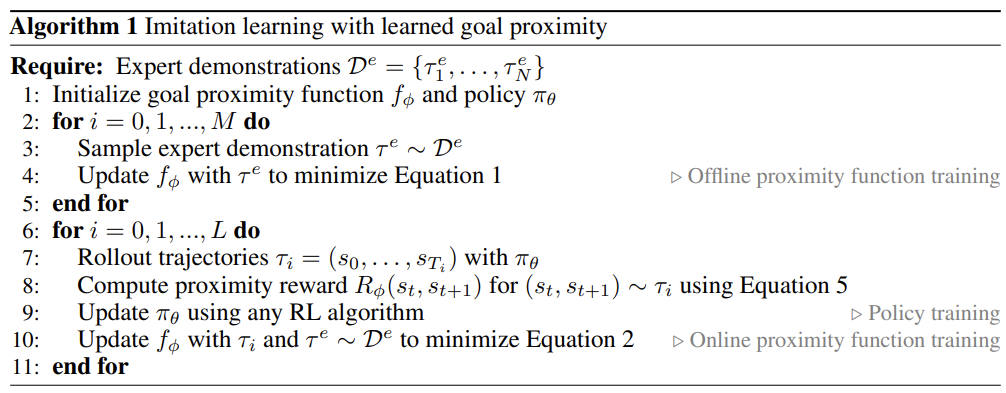

The algorithm is as follows:

In the experiment part, we can see several settings of LfO generalization. It would be better presented in paper rather than in this blog.

After all, this paper shows strong and solid experiment result, but with many intuition-guided design.